One of the most common friction points in a post-procurement implementation is the "Data Discrepancy Crisis."

It usually happens three weeks after launch. A data analyst compares the conversion numbers in the new CRO tool against the company's system of record (usually Google Analytics or Adobe Analytics) and finds a 12% discrepancy.

Panic ensues. The validity of the tool is questioned. Engineering is blamed for a faulty integration. But in the overwhelming majority of cases, nothing is broken. The discrepancy is not a bug; it is a feature of how different systems define "truth."

The Illusion of "Perfect" Data

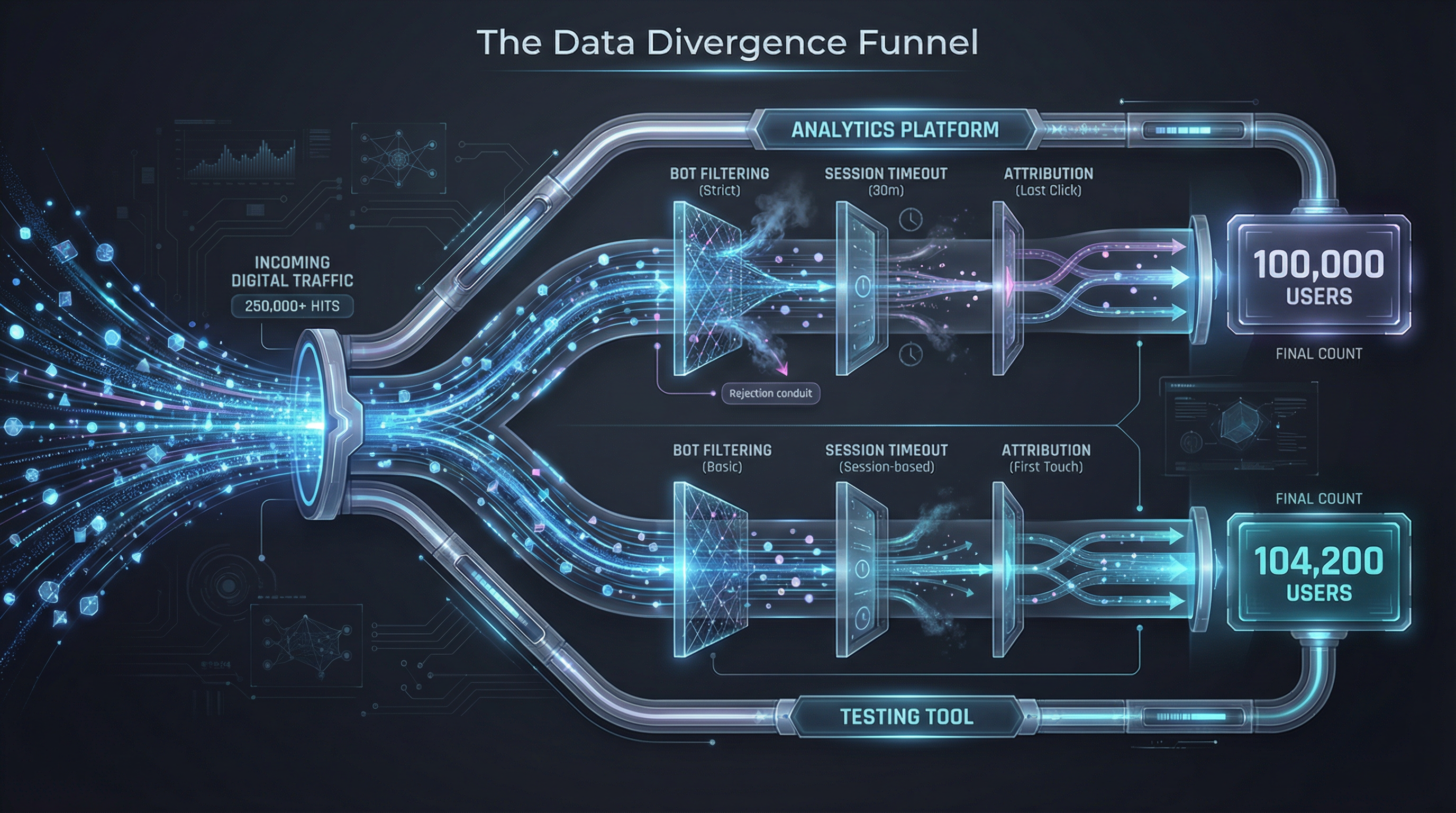

To understand why tools disagree, we must accept a hard reality: There is no such thing as objective web analytics. Every tool is an opinionated filter applied to a raw stream of network requests.

When a user visits your site, they generate a stream of events. Your analytics platform and your testing tool both observe this same stream, but each filters and counts it according to its own rules.

The Three Drivers of Divergence

While there are dozens of technical reasons for data mismatch, three factors account for the vast majority of discrepancies.

1. Bot Filtering

Analytics platforms aggressively filter "known bots" against large, centrally maintained lists (GA4, for example, automatically excludes traffic from known bots and spiders). Testing tools often use lighter, session-based filtering so that real users aren't accidentally blocked from experiments.
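
To make the mechanism concrete, here is a minimal sketch under a deliberately simplified model: each tool drops any visit whose user-agent string contains one of its bot signatures. The signature lists, sample user agents, and the `count_visitors` helper are all hypothetical. Real platforms rely on curated lists and behavioral signals, and the testing tool's lighter session-based rules are approximated here by a shorter list.

```python
# Hypothetical signatures standing in for an analytics platform's large bot list.
ANALYTICS_BOT_SIGNATURES = ["bot", "crawler", "spider", "headlesschrome", "python-requests"]

# Hypothetical lighter rule for a testing tool: only drop self-declared bots.
TESTING_BOT_SIGNATURES = ["bot"]


def count_visitors(user_agents, signatures):
    """Count user agents that match none of the given signatures (case-insensitive)."""
    kept = 0
    for ua in user_agents:
        lowered = ua.lower()
        if not any(sig in lowered for sig in signatures):
            kept += 1
    return kept


# Hypothetical raw traffic seen by both tools.
user_agents = [
    "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0",
    "Mozilla/5.0 (iPhone) Safari/605.1",
    "Googlebot/2.1",
    "Mozilla/5.0 HeadlessChrome/120.0",
    "python-requests/2.31",
    "Mozilla/5.0 (Macintosh) Firefox/121.0",
]

analytics_count = count_visitors(user_agents, ANALYTICS_BOT_SIGNATURES)
testing_count = count_visitors(user_agents, TESTING_BOT_SIGNATURES)
print(analytics_count, testing_count)  # 3 5
```

The same six visits produce 3 visitors under the stricter rule and 5 under the lighter one, with no bug in either.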

2. Attribution

If a user first visits via Email, leaves, and later returns via Direct to convert, GA4 may credit the conversion to "Email" (last non-direct click). The testing tool ignores the channel entirely: it counts the conversion against whichever variant the user was assigned, regardless of how they arrived.
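
A minimal sketch of the two crediting rules, assuming a user journey is represented as an ordered list of channel names. This representation and the helper names are hypothetical, and GA4's real attribution also involves lookback windows and other settings not modeled here.

```python
def last_non_direct_channel(touches):
    """Return the most recent non-'Direct' channel, or 'Direct' if there is none."""
    for channel in reversed(touches):
        if channel != "Direct":
            return channel
    return "Direct"


def credit_conversion(touches, variant):
    """Show how each system credits the same conversion."""
    return {
        # Analytics-style: credit the last channel that was not Direct.
        "analytics_channel": last_non_direct_channel(touches),
        # Testing-tool-style: ignore channels, credit the assigned variant.
        "testing_variant": variant,
    }


journey = ["Email", "Direct"]  # visited via Email, returned via Direct
print(credit_conversion(journey, "B"))
# {'analytics_channel': 'Email', 'testing_variant': 'B'}
```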

3. Latency

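Client-side testing tools typically need to execute before the page renders to prevent "flicker" (the original content flashing briefly before the variant appears). Analytics tags often fire later, after the page loads. Users who bounce within that gap may be counted by the testing tool but never recorded by analytics.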
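
The timing gap can be illustrated with a toy model, assuming each tag records a visit only if the user is still on the page when the tag fires. The fire times and exit times below are invented for illustration and are not measurements of any product; `recorded_by` is a hypothetical helper.

```python
# Hypothetical tag fire times, in milliseconds after navigation start.
TESTING_TAG_MS = 150     # runs early, before first render
ANALYTICS_TAG_MS = 1200  # runs after the page has loaded


def recorded_by(exit_times_ms, tag_fire_ms):
    """Count visits where the user was still on the page when the tag fired."""
    return sum(1 for exit_ms in exit_times_ms if exit_ms >= tag_fire_ms)


# Hypothetical moment (ms) at which each visitor left the page.
exit_times_ms = [100, 400, 900, 3000, 5000, 12000]

testing_visits = recorded_by(exit_times_ms, TESTING_TAG_MS)
analytics_visits = recorded_by(exit_times_ms, ANALYTICS_TAG_MS)
print(testing_visits, analytics_visits)  # 5 3
```

The visitors who leave at 400 ms and 900 ms are seen by the testing tool but never reach the analytics tag, which is exactly the fast-bounce gap described above.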

Defining "Acceptable Variance"

If perfect alignment is impossible, what should procurement teams write into their acceptance criteria?

A commonly cited rule of thumb for "Acceptable Variance" between client-side tools is ±5% to 10%. Consistency matters more than size: if the gap is stable (e.g., the testing tool is always about 8% higher than Analytics), the data is trustworthy. You are simply seeing the difference in filtering logic.
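
As a worked example, assuming the analytics count is used as the baseline (a convention chosen here, not a requirement), an 8% gap like the one above comes from hypothetical counts of 1,080 conversions in the testing tool and 1,000 in analytics:

```latex
\begin{aligned}
\text{Variance} &= \frac{N_{\text{test}} - N_{\text{analytics}}}{N_{\text{analytics}}} \times 100\% \\
                &= \frac{1080 - 1000}{1000} \times 100\% = +8\%
\end{aligned}
```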

Red Flags (When to Worry):

- Variance > 15%: This suggests a genuine implementation error (e.g., tags firing in the wrong order).

- Inconsistent Variance: If the gap is +5% on Monday and -20% on Tuesday, the integration is unstable.

- Zero Variance: Paradoxically, if the numbers match exactly, it often means someone has manually "cooked" the data, or both systems are being fed the same server-side events that bypass the client entirely.
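
These checks can be automated against daily exports. The sketch below is illustrative only: the function names and the spread threshold are hypothetical, and measuring "inconsistency" as the population standard deviation of the daily gaps is one reasonable choice among several.

```python
from statistics import pstdev


def daily_variance_pct(test_counts, analytics_counts):
    """Daily gap in percent, using the analytics count as the baseline.

    Assumes every analytics count is non-zero.
    """
    return [(t - a) / a * 100 for t, a in zip(test_counts, analytics_counts)]


def red_flags(test_counts, analytics_counts, max_abs_pct=15.0, max_spread_pct=5.0):
    """List the red flags raised by a non-empty series of daily counts.

    Both thresholds are illustrative assumptions, not an industry standard:
    max_abs_pct mirrors the 15% rule above, and max_spread_pct caps the
    population standard deviation of the daily gaps.
    """
    gaps = daily_variance_pct(test_counts, analytics_counts)
    flags = []
    if any(abs(g) > max_abs_pct for g in gaps):
        flags.append("variance_over_threshold")
    if pstdev(gaps) > max_spread_pct:
        flags.append("inconsistent_variance")
    if all(g == 0 for g in gaps):
        flags.append("zero_variance")
    return flags


# Hypothetical daily conversion counts: testing tool vs analytics.
print(red_flags([1080, 1085, 1078], [1000, 1005, 998]))  # []
print(red_flags([1050, 800], [1000, 1000]))  # ['variance_over_threshold', 'inconsistent_variance']
print(red_flags([1000, 1000], [1000, 1000]))  # ['zero_variance']
```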

The Strategic Solution: One-Way Integration

Instead of trying to force two systems to agree, smart organizations adopt a "One-Way Integration" policy.

Do not use the testing tool's built-in analytics as your system of record for revenue. Instead, configure the testing tool to send an Event or Dimension to your Analytics platform (e.g., "Experiment: Checkout_Flow_V2").
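
Once the experiment arrives in analytics as a dimension, per-variant counts can be read straight from analytics data. A minimal sketch, assuming a hypothetical export in which each session row carries a user ID, a conversion flag, and an experiment label such as "Checkout_Flow_V2: A". The field names and label format are assumptions, not any platform's schema.

```python
from collections import defaultdict

# Hypothetical session rows exported from the analytics platform.
rows = [
    {"user_id": "u1", "experiment": "Checkout_Flow_V2: A", "converted": True},
    {"user_id": "u2", "experiment": "Checkout_Flow_V2: A", "converted": False},
    {"user_id": "u2", "experiment": "Checkout_Flow_V2: A", "converted": False},
    {"user_id": "u3", "experiment": "Checkout_Flow_V2: B", "converted": True},
    {"user_id": "u4", "experiment": "Checkout_Flow_V2: B", "converted": True},
    {"user_id": "u5", "experiment": None, "converted": True},  # not in the test
]


def aggregate_by_variant(rows):
    """Return {label: (unique users, unique converting users)} per experiment label."""
    users = defaultdict(set)
    converters = defaultdict(set)
    for row in rows:
        label = row["experiment"]
        if label is None:
            continue  # sessions without the dimension are outside the experiment
        users[label].add(row["user_id"])
        if row["converted"]:
            converters[label].add(row["user_id"])
    return {label: (len(users[label]), len(converters[label])) for label in users}


print(aggregate_by_variant(rows))
# {'Checkout_Flow_V2: A': (2, 1), 'Checkout_Flow_V2: B': (2, 2)}
```

Because the counts come from the analytics platform, they inherit the same bot filtering and user definitions as the rest of your business reporting.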

This allows you to analyze the test results inside your Analytics platform. The raw user count may still differ, but the relative difference (the lift) will be calculated using the same "truth" logic as the rest of your business reporting.
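
Why the lift survives a raw-count gap can be checked with a little arithmetic. This sketch assumes, with hypothetical numbers, that the testing tool records the same conversions but 8% more users in both arms. The relative lift is then identical, because the common factor cancels out of the ratio.

```python
def conversion_rate(conversions, users):
    return conversions / users


def relative_lift(control, variant):
    """Relative lift of variant over control; each arm is (users, conversions)."""
    cr_control = conversion_rate(control[1], control[0])
    cr_variant = conversion_rate(variant[1], variant[0])
    return (cr_variant - cr_control) / cr_control


# Hypothetical analytics-side counts per arm: (users, conversions).
analytics_control = (10_000, 300)
analytics_variant = (10_000, 330)

# Assume the testing tool sees 8% more users in BOTH arms, same conversions.
factor = 1.08
testing_control = (analytics_control[0] * factor, analytics_control[1])
testing_variant = (analytics_variant[0] * factor, analytics_variant[1])

print(round(relative_lift(analytics_control, analytics_variant), 4))  # 0.1
print(round(relative_lift(testing_control, testing_variant), 4))      # 0.1
```

The cancellation holds only when the gap is the same proportion in both arms. If a variant itself changes load timing, the gap can differ between arms, and the two tools may then report different lifts.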

Procurement Implications

Understanding data architecture is just one part of selecting the right stack. For a complete breakdown of how to evaluate tools based on their data handling and integration capabilities, consult our primary guide.

Read the Procurement Guide: Website Optimization & CRO Software